Timbre & Affect

Towards Mapping Timbre to Emotional Affect

≺Abstract≻

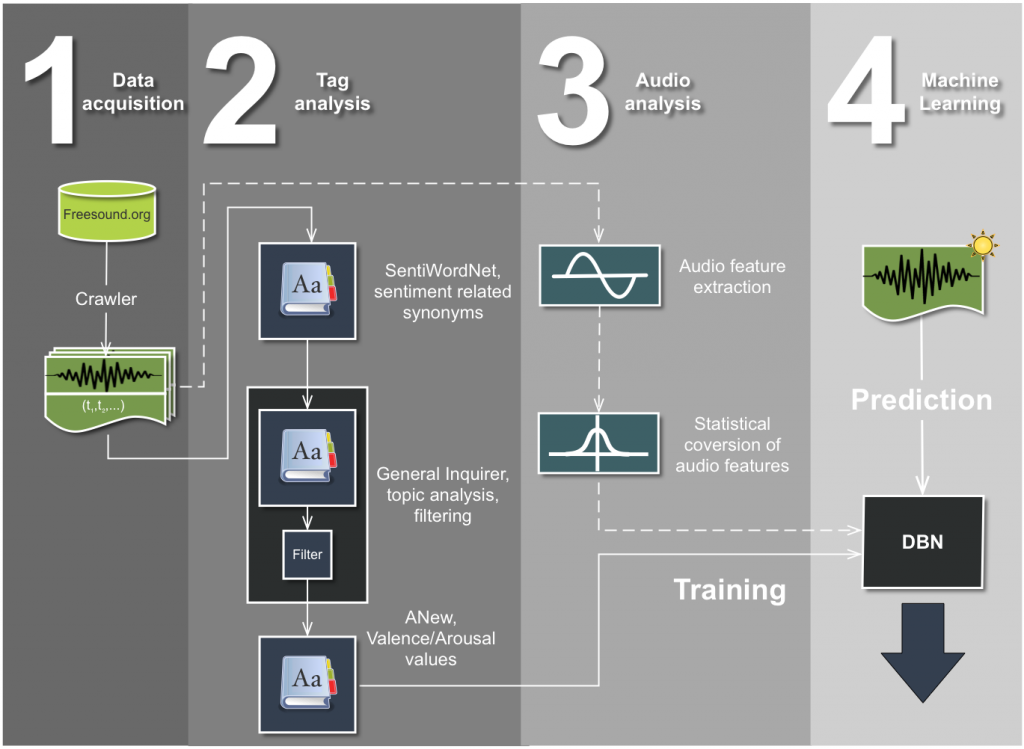

Controlling the timbre generated by an audio synthesizer in a goal-oriented way requires a profound understanding of the synthesizer’s manifold structural parameters. Shaping timbre expressively to communicate emotional affect demands particular expertise. Novices may therefore be unable to control timbre well enough to articulate the wealth of affects musically. In this context, this paper focuses on developing a model that represents the relationship between timbre and an expected emotional affect. The evaluation results for the presented model are encouraging, which supports its use in steering or augmenting the control of audio synthesis. We explicitly envision this paper as a contribution to the field of Synthesis by Analysis in the broader sense, although the model may also be suitable for other related domains.

≺Publication≻

“Towards Mapping Timbre to Emotional Affect”

Authors: Niklas Klügel & Georg Groh

Proceedings of the 13th International Conference on New Interfaces for Musical Expression, NIME’13, May 27 – 30, 2013, KAIST, Daejeon, Korea

Paper Presentation

≺Code & Dataset≻

Not yet available. Please contact me if you need them urgently.

☲☵☲